In its third and final year, the UNH International Research Experiences for Students (IRES) program selected eight students to conduct research in the HCI Lab at the University of Stuttgart, under the supervision of my colleague Albrecht Schmidt. The UNH IRES program is funded by the National Science Foundation, Office of International and Integrative Activities, and we are grateful for their support. Each of the eight students was assigned to a group within the HCI Lab and participated in the research activities of that group.

I asked each of the students to write a one-paragraph report on their summer experience in Stuttgart, focusing on both their research and their cultural experience. Here’s the first installment of these brief reports, which looks at some of the research conducted by the students.

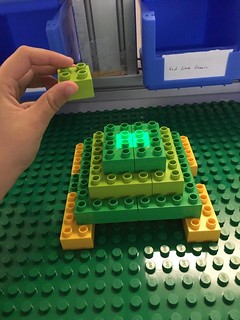

Taylor Gotfrid worked on using augmented reality in assistive systems:

During my time here, I learned about experiment design and augmented reality. Over this summer I’ve been working on designing and conducting a user study to determine whether picture instructions or projected instructions lead to better recall for assembly tasks over a long period of time. This experiment assesses which form of media leads to fewer errors, faster assembly times, and better recall over a span of three weeks. The picture above shows the projector system indicating where the next LEGO piece needs to be placed to complete the next step in the assembly process.

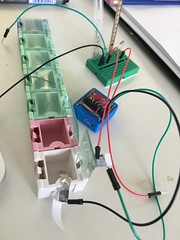

Dillon Montag worked on tactile interfaces for people with visual impairments:

HyperBraille: I am working with Mauro Avila on developing tactile interfaces for people with visual impairments. The tool we developed this summer will allow users to explore scientific papers while receiving both audio and tactile feedback. We hope this new tool will help people with visual impairments better understand and navigate papers.

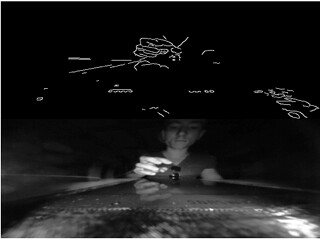

Anna Wong worked on touch recognition:

For my project with the University of Stuttgart lab, I was tasked with using images like the one on the left to detect the user’s hand, and then classify the finger being used to touch a touch screen. This involved transforming the images in a variety of ways, such as finding the edges using a Canny edge detector as in the top image, and then using machine learning algorithms to classify the finger.
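To give a rough feel for the pipeline Anna describes (extract edges, then classify), here is a minimal sketch in plain Python. It is not her implementation. Everything in it is an assumption made for illustration: the synthetic images, the `thumb` and `index` labels, and the helper names are all hypothetical. A plain Sobel gradient stands in for the Canny detector, and a nearest-centroid rule stands in for the unspecified machine learning algorithms.

```python
# Illustrative sketch only: synthetic data and hypothetical helper names.
# Sobel gradients stand in for Canny; nearest-centroid stands in for the
# (unspecified) machine learning algorithms used in the actual project.

def sobel_magnitude(img):
    """Sobel gradient magnitude of a 2D list of grayscale values.

    This is only the gradient step of Canny; smoothing, non-maximum
    suppression, and hysteresis thresholding are omitted.
    """
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = (img[y - 1][x + 1] + 2 * img[y][x + 1] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y][x - 1] - img[y + 1][x - 1])
            gy = (img[y + 1][x - 1] + 2 * img[y + 1][x] + img[y + 1][x + 1]
                  - img[y - 1][x - 1] - 2 * img[y - 1][x] - img[y - 1][x + 1])
            out[y][x] = (gx * gx + gy * gy) ** 0.5
    return out


def edge_features(img, threshold=1.0):
    """Two toy features: number of edge pixels and their horizontal extent."""
    mag = sobel_magnitude(img)
    cols = [x for row in mag for x, m in enumerate(row) if m > threshold]
    if not cols:
        return (0.0, 0.0)
    return (float(len(cols)), float(max(cols) - min(cols)))


def nearest_centroid(train, sample):
    """Return the label whose mean feature vector is closest to the sample."""
    best_label, best_dist = None, float("inf")
    for label, vecs in train.items():
        centroid = [sum(v[i] for v in vecs) / len(vecs)
                    for i in range(len(sample))]
        dist = sum((c - s) ** 2 for c, s in zip(centroid, sample))
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label


def synthetic_touch(width, col, size=12):
    """Made-up 'contact' image: a bright bar of the given width at column col."""
    return [[1.0 if 3 <= y <= 8 and col <= x < col + width else 0.0
             for x in range(size)]
            for y in range(size)]


# Assumption for the demo: a thumb leaves a wider contact area than an index finger.
TRAIN = {
    "thumb": [edge_features(synthetic_touch(6, 2)),
              edge_features(synthetic_touch(5, 3))],
    "index": [edge_features(synthetic_touch(1, 4)),
              edge_features(synthetic_touch(2, 5))],
}


def classify_finger(img):
    """Classify an image by comparing its edge features to the toy training set."""
    return nearest_centroid(TRAIN, edge_features(img))


print(classify_finger(synthetic_touch(6, 4)))  # wide contact
print(classify_finger(synthetic_touch(2, 3)))  # narrow contact
```

A real system would work on camera or sensor images, use a full Canny implementation from an image-processing library, and train a classifier on labeled recordings; the sketch only shows how an edge map can be reduced to features that a classifier compares.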

Elizabeth Stowell worked on smart notifications:

I worked with smart objects and the use of notifications to support aging-in-place. I enjoyed building prototypes for a smart pillbox, sketching designs for a smart calendar, and exploring how people who are elderly interact with such objects. In these two months, I learned a lot about notification management within the context of smart homes.